In many industrial domains, full automation remains technically infeasible, economically impractical, or too rigid to accommodate the diversity of real-world tasks and objects. In such contexts, cobots provide an attractive alternative, enabling flexible human–robot collaboration in which human adaptability and dexterity complement robotic precision and endurance.

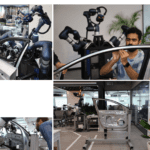

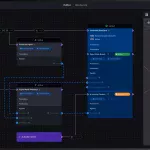

The Robo-Gym at SUPSI, developed and coordinated by the ARM Lab (Automation, Robotics and Machines Laboratory), was established within the EU project Fluently as a hub for interactive human–robot training. As the facility’s coordinator and principal developer, the ARM Lab introduced a speech-based, multimodal interaction platform that avoids rigid programming while remaining robust in noisy factory environments. The platform unites three devices in one smart interface: the RealWear Navigator 500 (100 dB noise-cancelled voice and assisted-reality guidance); H-Fluently, a mobile device running on-device, privacy-preserving ASR (Automatic Speech Recognition) and MSE (Mental State Evaluation); and R-Fluently, a PC hosting containerized AI modules for NLU (Natural Language Understanding), HTN (Hierarchical Task Network) planning, and artificial vision. Communication across the system relies on WebRTC for low-latency audio streaming, MQTT for device messaging, and ROS2 for inter-module communication.
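The split between the three transports can be illustrated with a minimal sketch. Only the transport assignments (WebRTC for audio, MQTT for device messaging, ROS2 for inter-module traffic) come from the description above; the `DeviceMessage` envelope, the `fluently/<source>/<kind>` topic layout, the JSON encoding, and the identifiers `h-fluently` and `asr_transcript` are hypothetical and are not taken from the actual platform.

```python
import json
from dataclasses import dataclass, asdict

# Transport used for each kind of traffic, as stated in the text.
TRANSPORTS = {
    "audio_stream": "WebRTC",
    "device_message": "MQTT",
    "inter_module": "ROS2",
}


@dataclass
class DeviceMessage:
    """Hypothetical envelope for a device-to-PC message (assumed schema)."""
    source: str    # sending device, e.g. "h-fluently" (assumed identifier)
    kind: str      # message type, e.g. "asr_transcript" (assumed name)
    payload: dict  # message body


def mqtt_topic(msg: DeviceMessage) -> str:
    # Assumed topic layout: fluently/<source>/<kind>
    return f"fluently/{msg.source}/{msg.kind}"


def encode(msg: DeviceMessage) -> str:
    # Serialize the envelope as JSON with stable key order.
    return json.dumps(asdict(msg), sort_keys=True)


msg = DeviceMessage("h-fluently", "asr_transcript", {"text": "unscrew the cover"})
print(TRANSPORTS["device_message"], mqtt_topic(msg), encode(msg))
```

In a sketch like this, only the transcript text travels over the device-messaging channel while raw audio stays on the streaming channel, which fits the separation of concerns the three transports suggest.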

New tasks can be taught through a hybrid approach that combines speech with hand guidance, significantly reducing the learning curve and improving operational efficiency. On R-Fluently, a voice-driven module adjusts the robot’s autonomy level, reallocates tasks when needed, and reconfigures the system rapidly for new applications. This makes the system particularly well suited to contexts where partial automation is preferable to full automation. For instance, experimental evaluations conducted in a disassembly workflow showed a significant drop in programming time for operations such as unscrewing, from about 500 s with teach-pendant programming to 75 s using speech plus hand guidance (an 85% reduction). ASR accuracy declined with diverse user accents and noisy environments, falling from about 75% under normal conditions to 64% at 80 dB noise levels. NLU, by contrast, remained robust, maintaining accuracies of 86% and 81% in normal and noisy settings respectively.
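The reported figures can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above; the helper name `relative_reduction` is introduced here for illustration, and the relative accuracy drops are derived values, not results reported by the evaluation.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a quantity shrinks going from `before` to `after`."""
    return (before - after) / before


# Programming time for unscrewing: teach pendant vs. speech plus hand guidance.
time_cut = relative_reduction(500, 75)

# Accuracy under normal conditions vs. 80 dB noise, in percentage points.
asr_drop_points = 75 - 64
nlu_drop_points = 86 - 81

# The same drops expressed relative to the normal-condition accuracy.
asr_rel = relative_reduction(75, 64)
nlu_rel = relative_reduction(86, 81)

print(f"programming time reduction: {time_cut:.0%}")                  # 85%
print(f"ASR drop: {asr_drop_points} points ({asr_rel:.1%} relative)")  # 11 points, 14.7%
print(f"NLU drop: {nlu_drop_points} points ({nlu_rel:.1%} relative)")  # 5 points, 5.8%
```

Under noise, ASR loses 11 percentage points while NLU loses 5, which is consistent with the observation that the language-understanding stage degrades less than the recognition stage feeding it.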

This ARM Lab implementation demonstrates that by combining noise-resilient hardware, privacy-preserving speech recognition and high-level semantic understanding, the developed smart interface substantially lowers the barrier for non-expert operators in real-world scenarios while preserving the flexibility needed for effective human–robot teamwork.